The history of computing technology is a journey marked by groundbreaking innovations and visionary minds, and pioneer-technology.com is here to guide you through it. This guide traces the evolution of computing from its mechanical origins to today’s cutting-edge advancements, and closes with a look at where the technology may be heading.

1. The Genesis of Computing: Mechanical Marvels

1.1. Weaving the Future: Jacquard’s Loom (1801)

The story begins not with circuits and code, but with threads and textiles. Joseph Marie Jacquard’s loom, introduced in 1801, automated fabric design using punched wooden cards. This innovation, while not a computer in the modern sense, laid the groundwork for future computing technologies by demonstrating the concept of programmable automation, and it went on to influence the likes of Charles Babbage.

1.2. Babbage’s Dream: From the Difference Engine to the Analytical Engine (1821)

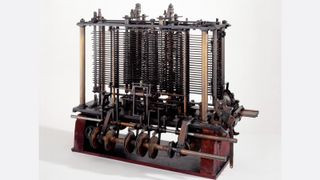

Charles Babbage Analytical Engine

In 1821, Charles Babbage, a visionary English mathematician, conceived the Difference Engine, a steam-powered calculating machine intended to automate the computation of mathematical tables. Although this ambitious project was never fully realized due to technological limitations, it showcased the potential of automated computation. Babbage’s later design, the Analytical Engine, envisioned a general-purpose computer with an arithmetic logic unit, control flow, and memory, earning him the title of “father of the computer”. According to the University of Minnesota, the project was funded by the British government but failed because the technology of the time was not up to the task.

1.3. The First Programmer: Ada Lovelace (1843)

Ada Lovelace, a brilliant mathematician and daughter of Lord Byron, etched her name in history by writing what is considered the world’s first computer program, published in 1843. As Anna Siffert, a professor of theoretical mathematics at the University of Münster in Germany, notes, Lovelace wrote the program while translating a paper on Babbage’s Analytical Engine. Her annotations included an algorithm to compute Bernoulli numbers, effectively making her the first computer programmer.
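To give a feel for the kind of calculation Lovelace described, here is a minimal modern sketch that computes Bernoulli numbers. It is not a transcription of her procedure for the Analytical Engine: it uses a standard textbook recurrence, and the function name `bernoulli_numbers` is our own choice for illustration.

```python
from fractions import Fraction
from math import comb  # available in Python 3.8+


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention B_1 = -1/2).

    Uses the recurrence  sum_{k=0}^{m} C(m+1, k) * B_k = 0  for m >= 1,
    solved for B_m. This is a modern formulation, assumed here for
    illustration; it is not Lovelace's original sequence of operations.
    """
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


if __name__ == "__main__":
    for index, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{index} = {value}")
```

Exact fractions are used instead of floating-point numbers so that the results stay exact, which mirrors the spirit of a mechanical calculator working digit by digit.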

1.4. Printing Calculations: Scheutz’s Calculator (1853)

In 1853, Swedish inventor Per Georg Scheutz and his son Edvard designed the world’s first printing calculator. This invention marked a significant milestone as the first machine capable of computing tabular differences and printing the results. According to Uta C. Merzbach’s book, “Georg Scheutz and the First Printing Calculator,” this innovation paved the way for more sophisticated calculating devices.
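The idea of “computing tabular differences” can be sketched in a few lines. The example below is a modern illustration of the method of finite differences, which lets a machine tabulate a polynomial using nothing but repeated addition. The function name and the register layout are assumptions made for this sketch, not a description of the Scheutz machine’s actual mechanism.

```python
def tabulate_by_differences(initial, count):
    """Tabulate a polynomial using additions only.

    `initial` holds the starting value followed by its first, second, ...
    differences, e.g. [0, 1, 2] for f(x) = x**2 starting at x = 0.
    Each step emits the current value, then adds every difference into
    the register above it, much like turning a crank once.
    """
    registers = list(initial)
    table = []
    for _ in range(count):
        table.append(registers[0])
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


if __name__ == "__main__":
    # Squares: f(0) = 0, first difference 1, constant second difference 2.
    print(tabulate_by_differences([0, 1, 2], 6))
    # Cubes: f(0) = 0, differences 1, 6, and constant third difference 6.
    print(tabulate_by_differences([0, 1, 6, 6], 5))
```

Because no multiplication is needed, the approach suits a purely mechanical design: once the starting differences are set, every further table entry follows from additions alone.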

1.5. Taming the Census: Hollerith’s Punch-Card System (1890)

Herman Hollerith revolutionized data processing in 1890 by designing a punch-card system to tabulate the U.S. Census. This invention significantly reduced calculation time, saving the U.S. government approximately $5 million and several years of work, according to Columbia University. Hollerith’s success led to the founding of a company that would later become International Business Machines Corporation (IBM).

2. The Dawn of the Electronic Era: Early 20th Century Innovations

2.1. Analog Computing Pioneer: Vannevar Bush (1931)

In 1931, Vannevar Bush at MIT created the Differential Analyzer, the first large-scale automatic general-purpose mechanical analog computer. This invention, as noted by Stanford University, marked a significant step forward in solving complex mathematical problems and paved the way for future advancements in computing technology.

2.2. The Turing Machine: Alan Turing’s Vision (1936)

Alan Turing, a British scientist and mathematician, laid the theoretical foundation for modern computers in 1936 with his concept of the Turing machine. This universal machine, capable of computing anything that is computable, became the cornerstone of computer science. Turing also played a crucial role in developing the Turing-Welchman Bombe, an electro-mechanical device used to decipher Nazi codes during World War II, according to the UK’s National Museum of Computing. As Chris Bernhardt notes in “Turing’s Vision,” Turing’s ideas are central to the modern computer.
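A Turing machine is simple enough to simulate in a short program. The sketch below is a minimal illustration under our own assumptions: the rule format, the state names, and the example machine (which flips every bit on the tape) are hypothetical choices, not taken from Turing’s paper.

```python
BLANK = "_"


def run_turing_machine(rules, tape_input, start_state, halt_state,
                       max_steps=10_000):
    """Run a single-tape Turing machine and return the final tape contents.

    `rules` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The tape is a dict so that it can grow in
    both directions; unwritten cells read as BLANK.
    """
    tape = dict(enumerate(tape_input))
    head, state, steps = 0, start_state, 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    if not tape:
        return ""
    cells = [tape.get(i, BLANK) for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(BLANK)


# Hypothetical example machine: invert every bit, halt at the first blank.
INVERT_RULES = {
    ("scan", "0"): ("1", "R", "scan"),
    ("scan", "1"): ("0", "R", "scan"),
    ("scan", BLANK): (BLANK, "R", "halt"),
}

if __name__ == "__main__":
    print(run_turing_machine(INVERT_RULES, "1011", "scan", "halt"))
```

The whole “program” lives in the rule table. Swapping in a different table changes what the machine computes without touching the simulator, which is the essence of the universal machine idea.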

2.3. The First Electronic Computer: Atanasoff-Berry Computer (1937)

John Vincent Atanasoff, a professor at Iowa State University, embarked on a groundbreaking project in 1937 to build the first electric-only computer. This innovation, free of gears, cams, belts, and shafts, represented a significant leap forward in computing technology.

2.4. Hewlett Packard’s Humble Beginnings (1939)

In 1939, David Packard and Bill Hewlett founded the Hewlett Packard Company in Palo Alto, California. Their first headquarters was in Packard’s garage, a location now considered the “birthplace of Silicon Valley”, according to MIT. The order of the founders’ names in the company name was decided by a coin toss.

2.5. Zuse’s Z3: The First Digital Computer (1941)

German inventor Konrad Zuse completed the Z3 in 1941, the world’s earliest digital computer. This machine, unfortunately, was destroyed during a bombing raid on Berlin during World War II. According to Gerard O’Regan’s book, “A Brief History of Computing,” Zuse later released the world’s first commercial digital computer, the Z4, in 1950.

2.6. ABC: The First Digital Electronic Computer in the U.S. (1941)

In 1941, Atanasoff and his graduate student, Clifford Berry, designed the first digital electronic computer in the U.S., called the Atanasoff-Berry Computer (ABC). This computer was the first to store information in its main memory and could perform one operation every 15 seconds, according to “Birthing the Computer.”

2.7. ENIAC: The Electronic Numerical Integrator and Computer (1945)

John Mauchly and J. Presper Eckert at the University of Pennsylvania designed and built the Electronic Numerical Integrator and Computer (ENIAC) in 1945. This machine was the first “automatic, general-purpose, electronic, decimal, digital computer,” according to Edwin D. Reilly’s book, “Milestones in Computer Science and Information Technology”.

2.8. UNIVAC: The First Commercial Computer (1946)

In 1946, Mauchly and Eckert left the University of Pennsylvania and received funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

2.9. The Transistor Revolution (1947)

William Shockley, John Bardeen, and Walter Brattain of Bell Laboratories invented the transistor in 1947. This invention, which allowed for the creation of an electric switch using solid materials, revolutionized electronics by replacing bulky vacuum tubes.

2.10. EDSAC: The First Practical Stored-Program Computer (1949)

A team at the University of Cambridge developed the Electronic Delay Storage Automatic Calculator (EDSAC) in 1949, “the first practical stored-program computer,” according to O’Regan. The EDSAC ran its first program in May 1949, calculating a table of squares and a list of prime numbers.

3. The Rise of Personal Computing: Late 20th Century

3.1. COBOL: The First Computer Language (1953)

Grace Hopper developed the first computer language, which eventually became known as COBOL (COmmon Business-Oriented Language), in 1953, according to the National Museum of American History. Hopper was later dubbed the “First Lady of Software” in her posthumous Presidential Medal of Freedom citation.

3.2. FORTRAN: Formula Translation (1954)

In 1954, John Backus and his team of programmers at IBM published a paper describing their newly created FORTRAN programming language, an acronym for FORmula TRANslation, according to MIT.

3.3. The Integrated Circuit: The Computer Chip (1958)

Jack Kilby and Robert Noyce unveiled the integrated circuit, known as the computer chip, in 1958. Kilby was later awarded the Nobel Prize in Physics for his work.

3.4. Engelbart’s Vision: The Modern Computer Prototype (1968)

First Computer Mouse

Douglas Engelbart revealed a prototype of the modern computer at the Fall Joint Computer Conference in San Francisco in 1968. His presentation, called “A Research Center for Augmenting Human Intellect,” included a live demonstration of his computer, featuring a mouse and a graphical user interface (GUI), according to the Doug Engelbart Institute.

3.5. UNIX: The Operating System Revolution (1969)

Ken Thompson, Dennis Ritchie, and a group of other developers at Bell Labs produced UNIX in 1969, an operating system that made “large-scale networking of diverse computing systems — and the internet — practical,” according to Bell Labs.

3.6. DRAM: The First Dynamic Random-Access Memory Chip (1970)

The newly formed Intel unveiled the Intel 1103, the first commercially available Dynamic Random-Access Memory (DRAM) chip, in 1970.

3.7. The Floppy Disk: Data Sharing Made Easy (1971)

A team of IBM engineers led by Alan Shugart invented the “floppy disk” in 1971, enabling data to be shared among different computers.

3.8. Magnavox Odyssey: The First Home Game Console (1972)

Ralph Baer, a German-American engineer, released Magnavox Odyssey, the world’s first home game console, in September 1972, according to the Computer Museum of America. Months later, entrepreneur Nolan Bushnell and engineer Al Alcorn with Atari released Pong, the world’s first commercially successful video game.

3.9. Ethernet: Connecting Computers (1973)

Robert Metcalfe, a member of the research staff for Xerox, developed Ethernet for connecting multiple computers and other hardware in 1973.

3.10. The Altair 8800: The First Minicomputer Kit (1975)

The January 1975 issue of “Popular Electronics” highlighted the Altair 8800 as the “world’s first minicomputer kit to rival commercial models.” Paul Allen and Bill Gates offered to write software for the Altair using the BASIC language. On April 4, after the success of this first endeavor, the two childhood friends formed their own software company, Microsoft.

3.11. Apple Computer: A New Era Begins (1976)

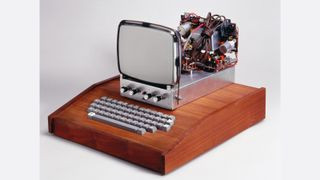

Apple I Computer

Steve Jobs and Steve Wozniak co-founded Apple Computer on April Fool’s Day in 1976. They unveiled Apple I, the first computer with a single-circuit board and ROM (Read Only Memory), according to MIT.

3.12. Commodore PET: The Personal Electronic Transactor (1977)

The Commodore Personal Electronic Transactor (PET) was released onto the home computer market in 1977, featuring an MOS Technology 8-bit 6502 microprocessor, which controls the screen, keyboard, and cassette player. The PET was especially successful in the education market, according to O’Regan.

3.13. Radio Shack TRS-80: The “Trash 80” (1977)

Radio Shack began its initial production run of 3,000 TRS-80 Model 1 computers — disparagingly known as the “Trash 80” — priced at $599 in 1977, according to the National Museum of American History. Within a year, the company took 250,000 orders for the computer, according to the book “How TRS-80 Enthusiasts Helped Spark the PC Revolution” (The Seeker Books, 2007).

3.14. The Apple II: Color Graphics and Audio Cassette Drive (1977)

The first West Coast Computer Faire was held in San Francisco in 1977. Jobs and Wozniak presented the Apple II computer at the Faire, which included color graphics and featured an audio cassette drive for storage.

3.15. VisiCalc: The First Computerized Spreadsheet Program (1978)

VisiCalc, the first computerized spreadsheet program, was developed beginning in 1978 and released for the Apple II in 1979.

3.16. WordStar: The First Commercially Successful Word Processor (1979)

MicroPro International, founded by software engineer Seymour Rubinstein, released WordStar, the world’s first commercially successful word processor, in 1979. WordStar was programmed by Rob Barnaby and included 137,000 lines of code, according to Matthew G. Kirschenbaum’s book, “Track Changes: A Literary History of Word Processing” (Harvard University Press, 2016).

3.17. IBM Acorn: The First Personal Computer (1981)

IBM Acorn Computer

“Acorn,” IBM’s first personal computer, was released onto the market at a price point of $1,565 in 1981, according to IBM. Acorn used the MS-DOS operating system from Microsoft.

3.18. Apple Lisa: The GUI Pioneer (1983)

The Apple Lisa, released in 1983, was the first personal computer to feature a GUI. Its name stood for “Local Integrated Software Architecture” but was also the name of Steve Jobs’ daughter, according to the National Museum of American History (NMAH).

3.19. Apple Macintosh: A Revolutionary Launch (1984)

The Apple Macintosh was announced to the world during a Super Bowl advertisement in 1984. The Macintosh was launched with a retail price of $2,500, according to the NMAH.

3.20. Microsoft Windows: The GUI Response (1985)

As a response to the Apple Lisa’s GUI, Microsoft released Windows in November 1985, the Guardian reported.

3.21. The World Wide Web: Tim Berners-Lee’s Proposal (1989)

Tim Berners-Lee, a British researcher at CERN, submitted his proposal for what would become the World Wide Web in 1989. His paper detailed his ideas for HyperText Markup Language (HTML), the building block of the Web.

3.22. Pentium: Advancing Graphics and Music (1993)

The Pentium microprocessor advanced the use of graphics and music on PCs in 1993.

3.23. Google Search Engine: A Stanford Creation (1996)

Sergey Brin and Larry Page developed the Google search engine at Stanford University in 1996.

3.24. Microsoft Invests in Apple (1997)

In 1997, Microsoft invested $150 million in Apple, which was struggling financially at the time. This investment ended an ongoing court case in which Apple accused Microsoft of copying its operating system.

3.25. Wi-Fi: Wireless Fidelity (1999)

Wi-Fi, the abbreviated term for “wireless fidelity,” was developed in 1999, initially covering a distance of up to 300 feet (91 meters), Wired reported.

4. The 21st Century: Innovations and Breakthroughs

4.1. Mac OS X: A New Operating System (2001)

Mac OS X, later renamed OS X then simply macOS, was released by Apple as the successor to its standard Mac Operating System in 2001. OS X went through 16 different versions, each with “10” as its title, and the first nine iterations were nicknamed after big cats, with the first being codenamed “Cheetah,” TechRadar reported.

4.2. AMD’s Athlon 64: The First 64-bit Processor (2003)

AMD’s Athlon 64, the first 64-bit processor for personal computers, was released to customers in 2003.

4.3. Mozilla Firefox 1.0: A Web Browser Challenge (2004)

Mozilla launched Firefox 1.0 in 2004. The web browser was one of the first major challengers to Microsoft’s Internet Explorer. During its first five years, Firefox exceeded a billion downloads, according to the Web Design Museum.

4.4. Google Buys Android (2005)

Google bought Android, a Linux-based mobile phone operating system, in 2005.

4.5. MacBook Pro: Apple’s First Intel-Based Computer (2006)

The MacBook Pro from Apple hit the shelves in 2006. The Pro was the company’s first Intel-based, dual-core mobile computer.

4.6. Windows 7: A New Operating System (2009)

Microsoft released Windows 7 to manufacturing on July 22, 2009, and it reached general availability on October 22, 2009. The new operating system featured the ability to pin applications to the taskbar, scatter windows away by shaking another window, easy-to-access jumplists, easier previews of tiles, and more, TechRadar reported.

4.7. iPad: Apple’s Flagship Tablet (2010)

Apple iPad

The iPad, Apple’s flagship handheld tablet, was unveiled in 2010.

4.8. Chromebook: Google’s Chrome OS (2011)

Google released the Chromebook, which runs on Google Chrome OS, in 2011.

4.9. Apple Watch and Windows 10 (2015)

Apple released the Apple Watch and Microsoft released Windows 10 in 2015.

4.10. The First Reprogrammable Quantum Computer (2016)

The first reprogrammable quantum computer was created in 2016. “Until now, there hasn’t been any quantum-computing platform that had the capability to program new algorithms into their system. They’re usually each tailored to attack a particular algorithm,” said study lead author Shantanu Debnath, a quantum physicist and optical engineer at the University of Maryland, College Park.

4.11. DARPA’s Molecular Informatics Program (2017)

The Defense Advanced Research Projects Agency (DARPA) is developing a new “Molecular Informatics” program that uses molecules as computers. “Chemistry offers a rich set of properties that we may be able to harness for rapid, scalable information storage and processing,” Anne Fischer, program manager in DARPA’s Defense Sciences Office, said in a statement.

4.12. Google Achieves Quantum Supremacy (2019)

A team at Google became the first to demonstrate quantum supremacy in 2019 by building a quantum computer that could feasibly outperform the most powerful classical computer. They described the computer, dubbed “Sycamore,” in a paper published that same year in the journal Nature.

4.13. Frontier: The First Exascale Supercomputer (2022)

Frontier, the first exascale supercomputer and the world’s fastest at the time, went online at the Oak Ridge Leadership Computing Facility (OLCF) in Tennessee in 2022. Built by Hewlett Packard Enterprise (HPE) at a cost of $600 million, Frontier uses nearly 10,000 AMD EPYC 7A53 64-core CPUs alongside nearly 40,000 AMD Instinct MI250X GPUs.

5. Understanding the Technological Timeline

The history of computing technology is a vast and complex topic, but it can be broken down into several key periods, each characterized by its own technologies and trends. The table below summarizes this timeline:

| Period | Key Technologies | Notable Trends |
|---|---|---|
| Mechanical Era | Gears, levers, punch cards | Automation of calculations, early programming concepts |
| Vacuum Tube Era | Vacuum tubes, relays | Large-scale computing, electronic digital computers |
| Transistor Era | Transistors, magnetic cores | Smaller, faster, more reliable computers |
| Integrated Circuit Era | Integrated circuits, semiconductors | Miniaturization, increased processing power |
| Microprocessor Era | Microprocessors, personal computers | Mass adoption of computing, software development |
| Modern Era | AI, quantum computing, cloud computing | Ubiquitous computing, data-driven innovation |

6. The Crucial Role of Key Figures

6.1. Charles Babbage

Dubbed the “father of the computer”, his inventions, like the Analytical Engine, showcased the potential of automated computation.

6.2. Ada Lovelace

The first computer programmer, her notes on Babbage’s Analytical Engine included an algorithm to compute Bernoulli numbers.

6.3. Alan Turing

His concept of the Turing machine laid the theoretical foundation for modern computers.

6.4. John Vincent Atanasoff

Designed the first digital electronic computer in the U.S., the Atanasoff-Berry Computer (ABC).

6.5. John Mauchly and J. Presper Eckert

Designed and built the Electronic Numerical Integrator and Computer (ENIAC), the first automatic, general-purpose, electronic, decimal, digital computer.

6.6. William Shockley, John Bardeen, and Walter Brattain

Invented the transistor, revolutionizing electronics by replacing bulky vacuum tubes.

6.7. Grace Hopper

Developed the first computer language, COBOL, and was later dubbed the “First Lady of Software.”

6.8. Jack Kilby and Robert Noyce

Unveiled the integrated circuit, known as the computer chip.

6.9. Douglas Engelbart

Revealed a prototype of the modern computer, featuring a mouse and a graphical user interface (GUI).

6.10. Ken Thompson and Dennis Ritchie

Produced UNIX, an operating system that made large-scale networking of diverse computing systems practical.

7. Key Innovations at a Glance

- Jacquard’s Loom (1801): Pioneered programmable automation.

- Babbage’s Engines (1821): From the Difference Engine to a design for a general-purpose computer.

- Lovelace’s Algorithm (1843): The first computer program.

- Hollerith’s System (1890): Revolutionized data processing.

- Turing Machine (1936): Foundation for modern computers.

- ENIAC (1945): First general-purpose electronic digital computer.

- Transistor (1947): Replaced vacuum tubes.

- COBOL (1953): First computer language.

- Integrated Circuit (1958): The computer chip.

- UNIX (1969): Made networking practical.

- Ethernet (1973): Connected computers.

- Apple II (1977): Color graphics and storage.

- IBM PC (1981): The personal computer.

- World Wide Web (1989): Revolutionized information sharing.

- Google (1996): Transformed information access.

- Wi-Fi (1999): Enabled wireless connectivity.

- Quantum Computer (2016): Advanced computing capabilities.

- Exascale Supercomputer (2022): Ushered in new era of computing.

8. What are the Search Intentions for the Keyword: A History of Computing Technology?

Understanding the search intentions behind “a history of computing technology” is crucial for providing relevant and valuable content. Here are five key search intentions:

- Informational: Users want to learn about the evolution of computing technology, key milestones, and influential figures.

- Educational: Students and researchers seek in-depth information for academic purposes, including detailed explanations of technologies and their impact.

- Historical Research: Individuals interested in historical context aim to understand how computing has shaped society, culture, and the economy.

- Technological Awareness: Professionals and enthusiasts want to stay updated on past and present technological advancements to anticipate future trends.

- Nostalgic Interest: Some users may be curious about the early days of computing, seeking to reminisce about older technologies and their personal experiences with them.

9. Why This History Matters

Understanding the history of computing technology isn’t just an academic exercise. It provides valuable insights into how far we’ve come, the challenges overcome, and the potential future directions of technology. This knowledge can inform decision-making, inspire innovation, and help us appreciate the profound impact of computing on our lives. As technology continues to advance at an exponential pace, grasping its historical context is more critical than ever.

10. The Future of Computing Technology

The story of computing technology is far from over. Quantum computing, artificial intelligence, and other emerging technologies promise to revolutionize the way we live and work. By understanding the past, we can better prepare for the future and harness the power of computing to solve some of the world’s most pressing challenges. The future of computer technology is a dynamic space that includes:

- Quantum Computing: Solving complex problems beyond classical computers.

- Artificial Intelligence: Enhancing automation and decision-making.

- Cloud Computing: Providing scalable and accessible resources.

- Nanotechnology: Creating ultra-small and efficient devices.

- Biocomputing: Merging biology with computer science.

11. FAQs About the History of Computing Technology

11.1. What is the first computer in history?

Charles Babbage’s Difference Engine, designed in the 1820s, is considered the first “mechanical” computer in history, according to the Science Museum in the U.K.

11.2. What are the five generations of computing?

The “five generations of computing” is a framework for assessing the entire history of computing and the key technological advancements throughout it. The first generation (1940s-1950s) used vacuum tubes. The second (1950s-1960s) incorporated transistors. The third (1960s-1970s) gave rise to integrated circuits. The fourth is microprocessor-based, and the fifth is AI-based computing.

11.3. What is the most powerful computer in the world?

As of November 2023, the most powerful computer in the world is the Frontier supercomputer. The machine can reach a performance level of up to 1.102 exaFLOPS.

11.4. What was the first killer app?

There is broad consensus that VisiCalc, a spreadsheet program published by VisiCorp and originally released for the Apple II in 1979, holds that title. Steve Jobs even credited this app with propelling the Apple II to success, according to co-creator Dan Bricklin.

11.5. Who is considered the “father of the computer”?

Charles Babbage is widely regarded as the “father of the computer” for his conceptual designs of mechanical computing devices, particularly the Analytical Engine.

11.6. What was the significance of the ENIAC computer?

The ENIAC (Electronic Numerical Integrator and Computer) was significant as one of the first electronic general-purpose computers, capable of being reprogrammed to solve a wide range of computing problems.

11.7. How did the invention of the transistor impact computing?

The invention of the transistor revolutionized computing by replacing bulky and unreliable vacuum tubes, leading to smaller, faster, and more energy-efficient computers.

11.8. What role did IBM play in the history of computing?

IBM (International Business Machines) played a pivotal role in the history of computing by developing and commercializing key technologies like the IBM PC, which helped popularize personal computing.

11.9. What is Moore’s Law and how has it affected computing?

Moore’s Law, proposed by Gordon Moore, predicted that the number of transistors on a microchip would double approximately every two years, leading to exponential increases in computing power and decreases in cost.
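The doubling rule can be written as N(t) = N0 × 2^(t / T), where N0 is the starting transistor count, t is the elapsed time in years, and T is the doubling period. The short sketch below applies that formula. The function name and the starting count of 1,000 transistors are hypothetical values chosen for illustration, not historical data.

```python
def projected_transistors(initial_count, years_elapsed, doubling_period=2.0):
    """Project a transistor count under an idealized Moore's Law.

    Assumes a perfectly regular doubling every `doubling_period` years,
    which real chips only approximate.
    """
    return initial_count * 2 ** (years_elapsed / doubling_period)


if __name__ == "__main__":
    start = 1_000  # hypothetical starting count, for illustration only
    for years in (0, 2, 10, 20):
        count = projected_transistors(start, years)
        print(f"after {years:2d} years: {count:,.0f} transistors")
```

Ten doublings over 20 years multiply the count by 2^10 = 1,024, which is why a steady two-year doubling produces such dramatic growth over a few decades.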

11.10. What are some of the current trends in computing technology?

Some current trends in computing technology include artificial intelligence (AI), machine learning, quantum computing, cloud computing, and the Internet of Things (IoT), all of which are shaping the future of how we interact with technology.
