Trends in computer science and technology are evolving rapidly, reshaping industries and many aspects of daily life. At pioneer-technology.com, we are dedicated to providing you with the most up-to-date and comprehensive insights into these advancements. Discover forward-thinking tech like machine learning, cloud computing, and blockchain technology.

1. What Is Driving The Current Trends In Computer Science And Technology?

The current trends in computer science and technology are driven by several key factors, including growing data volumes, advances in hardware, and rising demand for automation and efficiency. Together, these forces are changing how we live, work, and interact with the world around us.

- Big Data and Analytics: The exponential growth of data from various sources has created a need for advanced analytical tools.

- Increased Computing Power: The development of more powerful processors and memory systems enables complex computations and simulations.

- Demand for Automation: Industries are seeking to automate repetitive and complex tasks to improve efficiency and reduce human error.

- Cloud Computing: Provides scalable and cost-effective computing resources, enabling businesses to leverage advanced technologies without significant upfront investment.

- Globalization and Connectivity: Connects people and devices across the globe, facilitating data sharing and collaborative innovation.

2. What Are The Most Prominent Areas Of Growth In Computer Science?

Artificial intelligence, cybersecurity, and cloud computing represent some of the most prominent areas of growth in computer science today, impacting various sectors from healthcare to finance. These areas are not only expanding rapidly but also influencing other fields, leading to interdisciplinary innovations.

- Artificial Intelligence (AI): Focuses on creating machines that can perform tasks that typically require human intelligence.

- Cybersecurity: Addresses the increasing need to protect digital assets and information from cyber threats.

- Cloud Computing: Offers on-demand access to computing resources over the internet.

- Data Science and Analytics: Involves collecting, processing, and analyzing large datasets to extract meaningful insights.

- Internet of Things (IoT): Connects everyday devices to the internet, enabling them to collect and exchange data.

3. How Is Artificial Intelligence Reshaping Industries Today?

Artificial Intelligence (AI) is reshaping industries today by automating tasks, enhancing decision-making, and creating new products and services. From healthcare to finance, AI is revolutionizing how businesses operate and deliver value to their customers.

- Healthcare: AI is used for diagnosing diseases, personalizing treatment plans, and automating administrative tasks.

- Finance: AI algorithms are used for fraud detection, algorithmic trading, and customer service chatbots.

- Manufacturing: AI-powered robots and automation systems improve efficiency and reduce production costs.

- Retail: AI is used for personalized recommendations, inventory management, and customer experience enhancements.

- Transportation: Self-driving cars and AI-optimized logistics systems are transforming the transportation industry.

4. What Role Does Cybersecurity Play In Modern Computer Science Trends?

Cybersecurity plays a vital role in modern computer science trends by protecting data, ensuring privacy, and maintaining the integrity of systems and networks. As technology advances, so do the threats, making cybersecurity an essential component of any digital strategy.

- Data Protection: Cybersecurity measures protect sensitive data from unauthorized access and theft.

- Privacy Assurance: Cybersecurity practices support compliance with privacy regulations and protect personal information.

- System Integrity: Cybersecurity safeguards the integrity of computer systems and networks from malware and cyber attacks.

- Risk Management: Cybersecurity helps organizations identify and mitigate potential risks associated with cyber threats.

- Compliance: Cybersecurity controls help organizations meet industry standards and legal requirements.

5. What Is The Significance Of Cloud Computing In Current Technology Trends?

Cloud computing is significant in current technology trends because it provides scalable, cost-effective, and flexible IT resources, enabling businesses to innovate and grow without the constraints of traditional infrastructure. It supports various applications, from data storage to software development.

- Scalability: Cloud computing allows businesses to easily scale their IT resources up or down based on demand.

- Cost-Effectiveness: Cloud services reduce upfront spending on hardware and lower IT management costs.

- Flexibility: Cloud computing provides access to a wide range of services and tools, enabling businesses to adapt quickly to changing needs.

- Accessibility: Cloud-based applications and data can be accessed from anywhere with an internet connection.

- Innovation: Cloud platforms offer advanced technologies and development tools, fostering innovation and experimentation.

6. How Are Quantum Computing Advancements Shaping The Future Of Technology?

Quantum computing advancements are shaping the future of technology by offering the potential to solve complex problems that are beyond the capabilities of classical computers, impacting fields such as cryptography, drug discovery, and materials science. While still in its early stages, quantum computing promises to revolutionize various industries.

- Cryptography: A sufficiently large, fault-tolerant quantum computer could break widely used public-key algorithms such as RSA and elliptic-curve cryptography. This threat is driving the development of quantum-resistant (post-quantum) cryptography.

- Drug Discovery: Quantum simulations could accelerate the discovery of new drugs and therapies by modeling molecular interactions more accurately.

- Materials Science: Quantum computing may aid in the design of new materials with specific properties, such as superconductivity.

- Optimization: Quantum algorithms may help optimize complex systems, such as supply chains and financial models.

- Artificial Intelligence: Quantum computing may eventually speed up certain machine learning algorithms, although this remains an active research question.
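The cryptography point rests on Shor's algorithm. It reduces factoring a number N to finding the order r of a randomly chosen a modulo N, meaning the smallest r with a^r mod N = 1. Only that order-finding step needs a quantum computer.

The minimal Python sketch below is an illustration only, and its function names are hypothetical. It performs order finding by brute force, which takes exponential time on a classical machine. It then applies the classical post-processing to the toy value N = 15.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.
    This is the step a quantum computer would speed up."""
    if gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a: int, n: int):
    """Classical post-processing from Shor's algorithm.
    Returns a nontrivial factor pair of n, or None if this a does not work."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a^(r/2) is -1 mod n: pick another a
    # n divides (half - 1)(half + 1) but neither factor alone,
    # so gcd(half - 1, n) is a nontrivial divisor of n
    p = gcd(half - 1, n)
    if 1 < p < n:
        return p, n // p
    return None

print(factor_from_order(7, 15))  # (3, 5)
```

Some choices of `a` fail, either because the order is odd or because a^(r/2) is congruent to -1 mod N. In that case the full algorithm simply tries another `a`.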

7. What Impact Does Edge Computing Have On Data Processing And Efficiency?

Edge computing has a significant impact on data processing and efficiency by bringing computation and data storage closer to the source of data, reducing latency, and enabling real-time processing. This is particularly important for applications that require quick response times, such as autonomous vehicles and IoT devices.

- Reduced Latency: Edge computing minimizes the distance data needs to travel, reducing latency and improving response times.

- Real-Time Processing: Edge devices can process data in real-time, enabling immediate insights and actions.

- Bandwidth Optimization: Edge computing reduces the amount of data that needs to be transmitted to the cloud, optimizing bandwidth usage.

- Enhanced Security: Edge devices can process sensitive data locally, reducing the risk of data breaches during transmission.

- Improved Reliability: Edge computing enables applications to continue functioning even when connectivity to the cloud is interrupted.

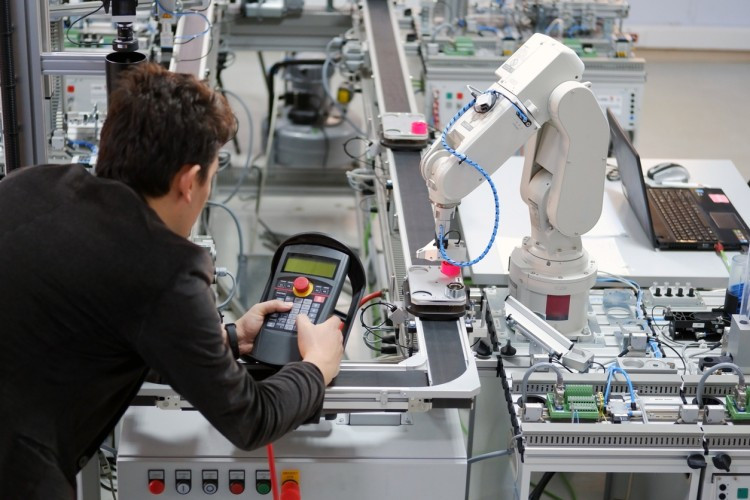

8. How Are Robotics And Automation Contributing To Technological Progress?

Robotics and automation are significantly contributing to technological progress by improving efficiency, increasing productivity, and reducing costs across various industries. From manufacturing to healthcare, robots and automated systems are transforming how tasks are performed.

- Increased Efficiency: For many repetitive tasks, robots work faster and more consistently than humans, increasing overall efficiency.

- Enhanced Productivity: Automated systems can operate 24/7, maximizing productivity and output.

- Reduced Costs: Automation reduces labor costs and minimizes errors, leading to significant cost savings.

- Improved Safety: Robots can perform dangerous tasks, reducing the risk of injury to human workers.

- Healthcare Advancements: Robots assist in surgeries, dispense medications, and provide support to patients.

9. What Skills Are Essential For Professionals In Computer Science Today?

Essential skills for professionals in computer science today include proficiency in programming languages, data analysis, cybersecurity, and cloud computing. These skills are necessary to stay competitive and contribute to the rapidly evolving technology landscape.

- Programming Languages: Proficiency in languages such as Python, Java, and C++ is essential for software development and data analysis.

- Data Analysis: Skills in data mining, statistical analysis, and machine learning are crucial for extracting insights from data.

- Cybersecurity: Knowledge of cybersecurity principles and practices is necessary to protect digital assets and information.

- Cloud Computing: Expertise in cloud platforms such as AWS, Azure, and Google Cloud is in high demand.

- Problem-Solving: Strong problem-solving skills are essential for identifying and addressing complex technical challenges.

10. What Future Trends Can We Anticipate In Computer Science And Technology?

We can anticipate several future trends in computer science and technology, including further advancements in AI, the integration of quantum computing, the expansion of edge computing, and the continued growth of IoT. These trends promise to transform industries and create new opportunities for innovation.

- Advancements in AI: AI will become more sophisticated and integrated into various aspects of our lives.

- Quantum Computing Integration: Quantum computers are expected to begin solving specific problems that are beyond the practical reach of classical computers.

- Expansion of Edge Computing: Edge computing will become more prevalent, enabling real-time processing and reducing latency.

- Growth of IoT: The number of connected devices will continue to grow, creating new opportunities for data collection and analysis.

- Sustainable Technology: Focus on developing environmentally friendly and sustainable technology solutions.

Computer Science Tech Trends


11. Artificial Intelligence (AI): The Intelligent Revolution

Artificial Intelligence (AI) is rapidly transforming industries by enabling machines to perform tasks that typically require human intelligence. This includes learning, problem-solving, and decision-making, making AI a pivotal trend in computer science.

- Definition: AI refers to the ability of machines to simulate human intelligence processes.

- Applications: AI is used in various applications, including healthcare, finance, transportation, and customer service.

- Machine Learning: A subset of AI that involves training machines to learn from data without explicit programming.

- Natural Language Processing (NLP): Enables machines to understand and process human language.

- Computer Vision: Allows machines to interpret and understand visual information.
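The sketch below makes "learning from data without explicit programming" concrete, using a tiny hypothetical dataset. The program is never told the rule y = 2x + 1. Instead, it recovers the slope and intercept by gradient descent on the mean squared error. Real machine learning systems use dedicated libraries and far larger models, but training by repeatedly reducing prediction error is the same basic idea.

```python
def fit_line(xs, ys, learning_rate=0.01, epochs=5000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical training data that happens to follow y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(round(w, 2), round(b, 2))  # 2.0 1.0
```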

According to PwC’s “Sizing the Prize” analysis, AI could increase global GDP by up to 14% by 2030, indicating its transformative economic potential.

12. Quantum Computing: The Next Frontier

Quantum computing is an emerging field that leverages the principles of quantum mechanics to perform complex calculations far beyond the capabilities of classical computers. Quantum computing is poised to revolutionize fields like cryptography, drug discovery, and materials science, offering unprecedented computational power.

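- Qubits: Quantum bits that can exist in a superposition of the states 0 and 1, unlike classical bits, which are either 0 or 1.

- Superposition: The ability of a qubit to be in a weighted combination of its basis states. When measured, it yields 0 or 1 with probabilities given by the squared magnitudes of those weights (amplitudes).

- Entanglement: A phenomenon in which the states of two or more qubits become linked, so that measurement outcomes on one are correlated with outcomes on the others. Quantum algorithms combine entanglement, superposition, and interference to solve certain problems efficiently.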

- Quantum Algorithms: Algorithms designed to run on quantum computers, such as Shor’s algorithm for factoring large numbers.

- Potential Applications: Quantum computing has the potential to revolutionize cryptography, drug discovery, and materials science.
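Superposition and entanglement can be illustrated with a small state-vector simulation written in plain Python; the helper names are hypothetical. First, a Hadamard gate puts one qubit into an equal superposition. Next, a Hadamard followed by a CNOT builds the two-qubit Bell state (|00⟩ + |11⟩)/√2.

Sampling measurements of the Bell state only ever yields 00 or 11, which is the correlation described above. Simulating n qubits this way needs 2^n amplitudes, which is one reason classical simulation stops scaling.

```python
import math
import random

SQRT_HALF = 1 / math.sqrt(2)

def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state [amp0, amp1]."""
    a0, a1 = state
    return [SQRT_HALF * (a0 + a1), SQRT_HALF * (a0 - a1)]

def hadamard_first(state):
    """Hadamard on the first qubit of a two-qubit state ordered [00, 01, 10, 11]."""
    a00, a01, a10, a11 = state
    return [SQRT_HALF * (a00 + a10), SQRT_HALF * (a01 + a11),
            SQRT_HALF * (a00 - a10), SQRT_HALF * (a01 - a11)]

def cnot(state):
    """CNOT with the first qubit as control: swaps the amplitudes of |10> and |11>."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state):
    """Outcome probability is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]

def measure(state, shots, rng):
    """Sample measurement outcomes (as bit strings) from a two-qubit state."""
    labels = ["00", "01", "10", "11"]
    return rng.choices(labels, weights=probabilities(state), k=shots)

# Superposition: |0> becomes (|0> + |1>)/sqrt(2)
plus = hadamard([1.0, 0.0])
print([round(p, 3) for p in probabilities(plus)])  # [0.5, 0.5]

# Entanglement: |00>, then H on the first qubit, then CNOT gives (|00> + |11>)/sqrt(2)
bell = cnot(hadamard_first([1.0, 0.0, 0.0, 0.0]))
print([round(p, 3) for p in probabilities(bell)])  # [0.5, 0.0, 0.0, 0.5]
print(set(measure(bell, shots=1000, rng=random.Random(0))))  # only '00' and '11' appear
```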

IBM is at the forefront of quantum computing research, and its quantum processors are being used to explore problems that are hard for classical computers.

13. Edge Computing: Decentralizing Data Processing

Edge computing brings computation and data storage closer to the source of data, reducing latency and enabling real-time processing. Edge computing is particularly beneficial for applications that require quick response times, such as autonomous vehicles, IoT devices, and augmented reality.

- Definition: Edge computing involves processing data near the edge of the network, rather than relying on centralized data centers.

- Reduced Latency: By processing data locally, edge computing minimizes the time it takes for data to travel to and from the cloud.

- Bandwidth Efficiency: Edge computing reduces the amount of data that needs to be transmitted over the network, optimizing bandwidth usage.

- Enhanced Security: Processing data locally can improve security by reducing the risk of data breaches during transmission.

- Real-Time Applications: Edge computing enables real-time applications such as autonomous vehicles, smart cities, and industrial automation.
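The bandwidth and latency points can be sketched with a hypothetical edge device that reads a temperature sensor. Instead of streaming every raw reading to the cloud, the device reduces each window of readings to a small summary. It also flags out-of-range values locally, so it can react without a round trip to a data center. The function name, threshold, and readings are illustrative assumptions.

```python
from statistics import mean

def summarize_window(readings, threshold):
    """Reduce a window of raw sensor readings to a compact summary.
    Only this summary, not every reading, would be sent upstream."""
    anomalies = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "anomalies": anomalies,
    }

# Hypothetical temperature readings (°C) collected on the edge device
window = [21.0, 21.2, 21.1, 35.5, 21.3, 21.0]
summary = summarize_window(window, threshold=30.0)

# The local decision happens immediately, without waiting on the cloud
needs_local_action = bool(summary["anomalies"])
print(summary)
print(needs_local_action)  # True
```

With real sensors sampling many times per second, one summary per window replaces hundreds of raw values. That reduction is where the bandwidth saving comes from.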

Gartner predicted that by 2025, 75% of enterprise-generated data would be created and processed outside a traditional centralized data center or cloud, highlighting the growing importance of edge computing.

14. Robotics: Automating the Physical World

Robotics involves the design, construction, operation, and application of robots. These machines can automate tasks, improve efficiency, and enhance safety across various industries. As robots become more sophisticated and autonomous, they are transforming how we work and live.

- Definition: Robotics is the branch of engineering that deals with the design, construction, operation, and application of robots.

- Industrial Robots: Used in manufacturing to automate repetitive tasks, such as assembly and welding.

- Service Robots: Designed to assist humans in various tasks, such as cleaning, delivery, and healthcare.

- Autonomous Robots: Robots that can operate independently without human intervention, such as self-driving cars and drones.

- AI Integration: The integration of AI with robotics enhances the capabilities of robots, allowing them to learn and adapt to new environments.

Boston Dynamics is a leader in robotics innovation, developing robots that can perform complex tasks in challenging environments.

15. Internet of Things (IoT): Connecting the World

The Internet of Things (IoT) involves connecting everyday devices to the internet, enabling them to collect and exchange data. IoT is transforming industries by providing real-time insights, improving efficiency, and creating new opportunities for innovation.

- Definition: IoT refers to the network of physical devices, vehicles, home appliances, and other items embedded with electronics, software, sensors, and network connectivity.

- Smart Homes: IoT devices enable automation and control of home appliances, lighting, and security systems.

- Smart Cities: IoT technologies are used to improve traffic management, energy efficiency, and public safety.

- Industrial IoT (IIoT): IoT devices are used in manufacturing to monitor equipment, optimize processes, and improve productivity.

- Healthcare IoT: IoT devices enable remote patient monitoring, medication management, and personalized healthcare.
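IoT devices commonly exchange data through a publish/subscribe pattern, using protocols such as MQTT. Devices publish readings to named topics, and other components subscribe to the topics they care about.

The sketch below is a hypothetical in-process stand-in for such a broker, not an MQTT client. It uses exact topic matching and leaves out networking, topic wildcards, and quality-of-service levels.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, dict], None]

class Broker:
    """Hypothetical in-process stand-in for an IoT message broker."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver payload to every handler subscribed to exactly this topic.
        Returns the number of handlers that received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)

actions = []

def cooling_rule(topic: str, payload: dict) -> None:
    # Hypothetical smart-home rule: switch on a fan above 26 °C
    if payload["celsius"] > 26.0:
        actions.append(f"fan on ({topic})")

broker = Broker()
broker.subscribe("home/livingroom/temperature", cooling_rule)
broker.publish("home/livingroom/temperature", {"celsius": 24.5})
broker.publish("home/livingroom/temperature", {"celsius": 27.0})
print(actions)  # ['fan on (home/livingroom/temperature)']
```

Decoupling publishers from subscribers means a new dashboard or rule can be added without changing the sensor that produces the data.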

Cisco estimates that there will be over 50 billion connected devices by 2030, underscoring the massive potential of IoT.

16. Cybersecurity: Protecting Digital Assets

Cybersecurity is the practice of protecting computer systems, networks, and digital data from unauthorized access, theft, and damage. As technology advances, so do the threats, making cybersecurity a critical trend in computer science.

- Definition: Cybersecurity involves implementing measures to protect digital assets from cyber threats.

- Threat Landscape: Includes malware, phishing, ransomware, and distributed denial-of-service (DDoS) attacks.

- Security Measures: Firewalls, antivirus software, intrusion detection systems, and encryption.

- Frameworks and Regulations: Frameworks such as the NIST Cybersecurity Framework and ISO/IEC 27001, and regulations such as the GDPR.

- Importance: Protecting sensitive data, ensuring business continuity, and maintaining trust with customers.
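Encryption appears among the security measures listed above. A closely related measure for stored credentials is password hashing. Python's standard library does not include general-purpose encryption, so this minimal sketch shows that related practice instead.

It uses salted, deliberately slow password hashing with PBKDF2 (`hashlib.pbkdf2_hmac`) and a constant-time comparison (`hmac.compare_digest`). The iteration count is an assumption; tune it to your hardware and current guidance.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor: every password guess costs this many rounds

def hash_password(password, salt=None, iterations=ITERATIONS):
    """Return (salt, digest). A fresh random salt makes identical passwords hash differently."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest

def verify_password(password, salt, expected_digest, iterations=ITERATIONS):
    """Recompute the digest and compare in constant time to avoid timing leaks."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected_digest)

salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("password123", salt, stored))                   # False
```

Only the salt and digest are stored, never the password itself. A leaked database therefore forces attackers into slow, per-password guessing.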

Forbes reports that cybercrime is expected to cost the world $10.5 trillion annually by 2025, highlighting the growing need for robust cybersecurity measures.

17. Blockchain Technology: Secure and Transparent Transactions

Blockchain technology is a decentralized, distributed, and immutable ledger that records transactions in a secure and transparent manner. Blockchain is transforming industries by providing trust, transparency, and efficiency in various applications.

- Definition: Blockchain is a distributed ledger technology that records transactions in blocks. Each block stores a cryptographic hash of the previous block, linking the blocks together in a chain.

- Decentralization: A public blockchain is not controlled by a single entity, which makes it resistant to censorship and to tampering by any one party.

- Transparency: On public blockchains, all transactions are visible to anyone, providing transparency and accountability.

- Security: Cryptographic techniques ensure the security and integrity of blockchain data.

- Applications: Cryptocurrency, supply chain management, healthcare, and voting systems.
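The hash linking that makes a blockchain tamper-evident can be shown in a few lines. In this minimal sketch, which uses hypothetical function names, each block stores the SHA-256 hash of the previous block. Editing any earlier block therefore breaks the link to the block after it.

The sketch deliberately leaves out consensus, proof of work, and digital signatures. Real blockchains need those mechanisms to stop a party from simply recomputing the hashes.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    # Serialize deterministically so the same block always hashes the same way
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()

def add_block(chain: list, transactions: list) -> None:
    """Append a block that records the hash of the current last block."""
    previous = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "transactions": transactions, "prev_hash": previous})

def is_valid(chain: list) -> bool:
    """Check that every block still points at the true hash of its predecessor."""
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True

chain = []
add_block(chain, [])  # genesis block
add_block(chain, [{"from": "alice", "to": "bob", "amount": 5}])
add_block(chain, [{"from": "bob", "to": "carol", "amount": 2}])
print(is_valid(chain))  # True

chain[1]["transactions"][0]["amount"] = 500  # rewrite history
print(is_valid(chain))  # False: block 2 no longer matches block 1's hash
```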

A report by PwC estimates that blockchain technology could boost global GDP by $1.76 trillion by 2030, underscoring its transformative potential.

18. Augmented Reality (AR) and Virtual Reality (VR): Enhancing User Experiences

Augmented Reality (AR) and Virtual Reality (VR) are technologies that enhance user experiences by overlaying digital information onto the real world (AR) or creating immersive, simulated environments (VR). AR and VR are transforming industries such as gaming, entertainment, education, and healthcare.

- Augmented Reality (AR): Overlays digital information onto the real world, enhancing the user’s perception of reality.

- Virtual Reality (VR): Creates immersive, simulated environments that users can interact with.

- Gaming: AR and VR provide immersive gaming experiences, enhancing gameplay and user engagement.

- Education: AR and VR enable interactive learning experiences, making education more engaging and effective.

- Healthcare: AR and VR are used for medical training, patient rehabilitation, and pain management.
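At its core, overlaying digital content in AR means drawing a virtual object at the pixel where it would appear if it were real. AR frameworks track the camera's pose and calibration for this purpose. Once an anchor point is known in camera coordinates, placing it on screen reduces to the pinhole camera projection u = f_x·X/Z + c_x, v = f_y·Y/Z + c_y.

The sketch below assumes hypothetical intrinsics for a 1280×720 camera. It also assumes the common computer-vision convention of x pointing right, y pointing down, and z pointing forward.

```python
def project_point(point_camera, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates (metres) to pixel coordinates
    with the pinhole model: u = fx*X/Z + cx, v = fy*Y/Z + cy."""
    x, y, z = point_camera
    if z <= 0:
        return None  # behind the camera: nothing to draw
    return (fx * x / z + cx, fy * y / z + cy)

# Hypothetical intrinsics: focal lengths and principal point in pixels
FX = FY = 1000.0
CX, CY = 640.0, 360.0

# A virtual label anchored 0.2 m to the right and 2 m in front of the camera
print(project_point((0.2, 0.0, 2.0), FX, FY, CX, CY))  # (740.0, 360.0)
```

Dividing by Z is what makes distant virtual objects shrink and converge toward the image center, so the overlay lines up with real objects at the same depth.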

According to a report by Statista, the global AR and VR market is projected to reach $300 billion by 2024, highlighting the rapid growth of these technologies.

19. Cloud Computing: On-Demand IT Resources

Cloud computing provides on-demand access to computing resources—such as servers, storage, and software—over the internet. Cloud computing is transforming industries by providing scalable, cost-effective, and flexible IT solutions.

- Definition: Cloud computing involves delivering computing services over the internet.

- Infrastructure as a Service (IaaS): Provides access to virtualized computing resources, such as servers and storage.

- Platform as a Service (PaaS): Offers a platform for developing, running, and managing applications.

- Software as a Service (SaaS): Delivers software applications over the internet on a subscription basis.

- Benefits: Scalability, cost-effectiveness, flexibility, and accessibility.
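Scalability in the cloud usually comes from autoscaling rules. Below is a minimal sketch of a target-tracking rule, modeled on the formula used by the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × currentMetric / targetMetric).

The bounds and utilization figures are illustrative assumptions. Production autoscalers also apply tolerances and stabilization or cooldown windows so they do not react to every brief spike.

```python
import math

def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """Target tracking: size the fleet so average utilization moves toward the target.
    Mirrors desired = ceil(current * current_metric / target_metric), then clamps."""
    desired = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, 90, 60))  # 6: demand spike, scale out
print(desired_replicas(4, 20, 60))  # 2: quiet period, scale in
```

Because capacity follows demand in both directions, the customer pays for six instances only while the spike lasts. This is the cost-effectiveness benefit listed above.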

Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are the leading cloud providers, offering a wide range of services and solutions.

20. Data Science and Analytics: Extracting Insights from Data

Data science and analytics involve collecting, processing, and analyzing large datasets to extract meaningful insights and support decision-making. Data science is transforming industries by enabling businesses to make data-driven decisions, improve efficiency, and create new products and services.

- Definition: Data science is an interdisciplinary field that uses scientific methods, algorithms, and systems to extract knowledge and insights from data.

- Data Mining: Discovering patterns and relationships in large datasets.

- Statistical Analysis: Using statistical methods to analyze data and draw conclusions.

- Machine Learning: Developing algorithms that can learn from data and make predictions.

- Applications: Business intelligence, fraud detection, healthcare analytics, and marketing optimization.
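Statistical analysis for fraud detection can start as simply as flagging values far from the mean. The sketch below uses hypothetical transaction amounts and flags any amount whose z-score exceeds a threshold. The z-score is the distance from the mean measured in standard deviations.

This is a baseline rather than a production method. A large outlier inflates the standard deviation and can mask others, which is why robust statistics or trained models are often used instead.

```python
from statistics import mean, stdev

def flag_outliers(amounts, z_threshold=2.0):
    """Return amounts whose z-score (|x - mean| / stdev) exceeds z_threshold."""
    mu = mean(amounts)
    sigma = stdev(amounts)  # sample standard deviation; needs at least two values
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [a for a in amounts if abs(a - mu) / sigma > z_threshold]

# Hypothetical card transactions; one is far out of line with the rest
transactions = [42.0, 38.5, 40.0, 45.2, 39.9, 41.3, 500.0, 43.1]
print(flag_outliers(transactions))  # [500.0]
```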

A report by McKinsey & Company estimates that data-driven organizations are 23 times more likely to acquire customers and 6 times more likely to retain them, underscoring the value of data science.

These trends are not just technological advancements; they represent fundamental shifts in how we approach problem-solving, innovation, and value creation. Staying informed about these developments is crucial for anyone involved in computer science and technology. At pioneer-technology.com, we strive to provide you with the most current and insightful analysis of these trends.

Navigating the ever-changing landscape of computer science and technology can be challenging, but you don’t have to do it alone. Visit pioneer-technology.com today to explore in-depth articles, expert analysis, and the latest updates on these groundbreaking innovations. Stay ahead of the curve and unlock the potential of tomorrow’s technology with us!

Address: 450 Serra Mall, Stanford, CA 94305, United States

Phone: +1 (650) 723-2300

Website: pioneer-technology.com

FAQ: Trends in Computer Science and Technology

1. What are the top trends in computer science right now?

AI, quantum computing, edge computing, robotics, IoT, cybersecurity, blockchain, AR/VR, cloud computing, and data science are some of the top trends in computer science right now.

2. How is AI impacting the tech industry?

AI is automating tasks, enhancing decision-making, and creating new products and services across various industries, leading to significant improvements in efficiency and innovation.

3. What is quantum computing, and why is it important?

Quantum computing uses quantum mechanics to solve complex problems beyond classical computers’ capabilities, impacting fields like cryptography, drug discovery, and materials science.

4. What is edge computing, and how does it improve data processing?

Edge computing processes data closer to its source, reducing latency and enabling real-time processing, which is crucial for applications like autonomous vehicles and IoT devices.

5. How are robots being used in different industries?

Robots are used in manufacturing for automation, in healthcare for assistance, and in logistics for delivery, improving efficiency and safety in various sectors.

6. What is the Internet of Things (IoT), and how is it changing our lives?

IoT connects everyday devices to the internet, enabling data collection and exchange, which is transforming industries like smart homes, smart cities, and industrial automation.

7. Why is cybersecurity so critical in today’s digital world?

Cybersecurity protects computer systems, networks, and data from cyber threats, ensuring business continuity, maintaining trust, and safeguarding sensitive information.

8. How does blockchain technology ensure secure transactions?

Blockchain is a decentralized ledger that records transactions securely and transparently, providing trust and efficiency in applications like cryptocurrency and supply chain management.

9. What are the applications of augmented reality (AR) and virtual reality (VR)?

AR and VR enhance user experiences in gaming, entertainment, education, and healthcare by overlaying digital information onto the real world or creating immersive simulated environments.

10. What are the benefits of using cloud computing services?

Cloud computing offers scalability, cost-effectiveness, flexibility, and accessibility, enabling businesses to innovate and grow without the constraints of traditional IT infrastructure.

11. How does data science help businesses make better decisions?

Data science extracts insights from large datasets, enabling businesses to make data-driven decisions, improve efficiency, and create new products and services.