Do you ever wonder what technology search engines use to ‘crawl’ websites? The answer lies in web crawlers, also known as spiders or bots, which are at the heart of how search engines like Google discover and index the vast expanse of the internet. At pioneer-technology.com, we’ll explore these fascinating tools, delving into their functionalities and how they impact your website’s visibility. Discover the inner workings of search engine technology and learn how to optimize your site for better search engine rankings.

1. What Are Web Crawlers (Search Engine Bots)?

Web crawlers, also called spiders or bots, are automated software programs that search engines like Google and Bing use to explore and analyze the content of websites. These bots systematically visit web pages, read their content, and follow links to discover new pages. By understanding the information on each page, search engines can accurately retrieve relevant results when users search for specific topics. These programs are essential for search engines to maintain an up-to-date index of the internet.

1.1. Why Are Web Crawlers Important?

Web crawlers are crucial for several reasons:

- Discovery: They find new and updated content across the web.

- Indexing: They categorize and store information from web pages in a searchable format.

- Ranking: The content they collect gives search engines the data needed to judge the relevance and quality of web pages for specific search queries.

Without web crawlers, search engines would be unable to deliver accurate and comprehensive search results.

1.2. How Do Web Crawlers Work?

Web crawlers operate by:

- Starting with a list of known URLs: These are often popular websites or URLs submitted by website owners.

- Visiting each URL: The crawler requests the page from the web server.

- Analyzing the content: The crawler extracts text, images, and other media.

- Following links: The crawler identifies and follows hyperlinks to discover new URLs.

- Adding content to the index: The extracted information is stored in the search engine’s index.

- Repeating the process: The crawler continuously revisits pages to check for updates and new content.

This continuous process ensures that search engines have a comprehensive and up-to-date understanding of the web.
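The steps above can be sketched as a short breadth-first crawl loop. This is a minimal illustration rather than how any real search engine is built: the `crawl` function, the `LinkExtractor` class, and the in-memory `PAGES` dictionary are all hypothetical names introduced here, and the "index" is just a dictionary of raw HTML. Pages are served from memory so the sketch runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl. `fetch(url)` returns HTML text, or None on failure."""
    frontier = deque(seed_urls)   # URLs waiting to be visited
    seen = set(seed_urls)         # URLs already queued, to avoid revisits
    index = {}                    # url -> raw HTML (stand-in for a real index)

    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        index[url] = html

        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments.
            absolute, _ = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return index


# Hypothetical in-memory "web" used in place of real HTTP requests.
PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="/">Home</a>',
    "https://example.com/b": "<p>No links here</p>",
}
crawled = crawl(["https://example.com/"], PAGES.get)
print(sorted(crawled))
```

Passing the fetch function in as a parameter keeps the loop independent of any HTTP library. A production crawler would also need robots.txt checks, rate limiting, and scheduled revisits, none of which are shown here.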

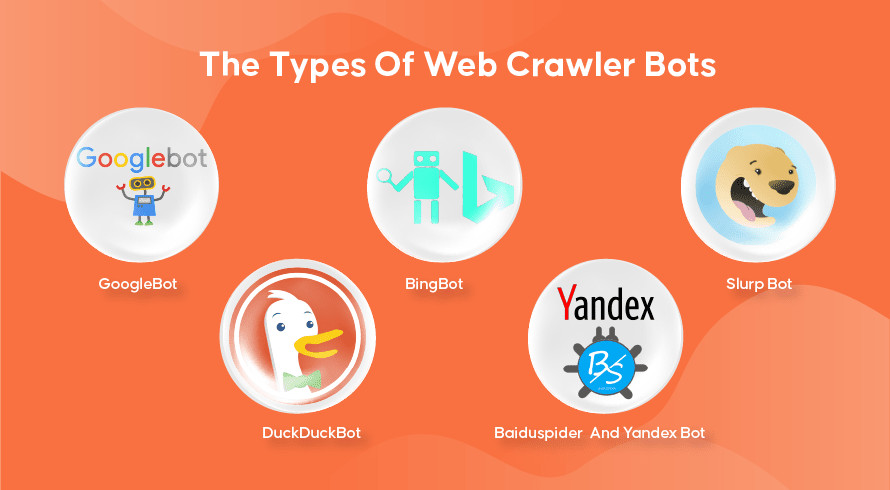

2. What Are the Different Types of Web Crawlers?

Different Web Crawler Bots and their Functions | pioneer-technology.com

Different search engines use various web crawlers tailored to their specific needs. Here’s a look at some of the most prominent ones:

2.1. Googlebot

Googlebot is the web crawler used by Google, the world’s most popular search engine. It comes in two main versions:

- Googlebot Desktop: Simulates a user browsing the web on a desktop computer.

- Googlebot Smartphone: Simulates a user browsing the web on a smartphone. It is sometimes referred to as Googlebot Mobile.

Googlebot is highly efficient at crawling and indexing vast amounts of web content. However, it can sometimes struggle with complex website structures and may take time to index newly published pages. According to research from Stanford University’s Department of Computer Science, Googlebot indexes hundreds of billions of web pages.

2.2. Bingbot

Bingbot is Microsoft’s web crawler for the Bing search engine. It functions similarly to Googlebot, analyzing web pages and following links. Bing Webmaster Tools also includes a fetching tool that lets website owners see how Bingbot crawls their pages, helping identify potential issues.

2.3. Slurp Bot

Slurp Bot is Yahoo’s web crawler. While Yahoo also uses Bingbot for its search results, Slurp Bot is still used to gather content for Yahoo’s partner sites, including Yahoo News, Yahoo Sports, and Yahoo Finance. Website owners need to allow Slurp Bot access for their content to appear in Yahoo Mobile search results.

2.4. DuckDuckBot

DuckDuckBot is the web crawler for DuckDuckGo, a search engine known for its commitment to user privacy. DuckDuckGo does not track user activity and provides search results from its own crawler, as well as crowd-sourced websites like Wikipedia and other search engines.

2.5. Baiduspider and Yandex Bot

Baiduspider is the web crawler used by Baidu, the dominant search engine in China, where it has over 80% share of the search engine market in mainland China. Yandex Bot is used by Yandex, the leading search engine in Russia.

2.6. Other Specialized Crawlers

In addition to the major search engine crawlers, there are also specialized crawlers that focus on specific types of content or websites, such as:

- Image crawlers: Find and index images.

- Video crawlers: Find and index videos.

- News crawlers: Focus on news websites and articles.

- Academic crawlers: Search for scholarly articles and research papers.

These specialized crawlers help search engines provide more targeted and relevant results for specific types of queries.
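The crawlers named in this section announce themselves through a token in the User-Agent header of each request, which makes it possible to spot them in server logs. Here’s a hedged sketch of that lookup. The `identify_crawler` function and the `CRAWLER_TOKENS` table are hypothetical names for this example, and the sample User-Agent strings are illustrative. Because a User-Agent header can be spoofed, a match should be treated as a hint and not as proof of identity.

```python
# Substring tokens that the crawlers named above include in their User-Agent header.
CRAWLER_TOKENS = {
    "Googlebot": "Google",
    "bingbot": "Bing",
    "Slurp": "Yahoo",
    "DuckDuckBot": "DuckDuckGo",
    "Baiduspider": "Baidu",
    "YandexBot": "Yandex",
}


def identify_crawler(user_agent):
    """Return the search engine name for a User-Agent string, or None."""
    lowered = user_agent.lower()
    for token, engine in CRAWLER_TOKENS.items():
        if token.lower() in lowered:
            return engine
    return None


# Illustrative User-Agent strings, as they might appear in a server log.
samples = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
]
for ua in samples:
    print(identify_crawler(ua), "<-", ua)
```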

3. How Do Web Crawling, Search Indexing, and Search Engine Ranking Work Together?

Search engines rely on a combination of web crawling, search indexing, and search engine ranking to deliver relevant and useful search results. Here’s how these processes work together:

3.1. Web Crawling: Discovering Content

Web crawling is the initial stage where bots find new and updated content on websites. The process involves:

- Starting with known URLs: Crawlers begin with a set of URLs to visit.

- Visiting web pages: Crawlers access the content of each web page.

- Following hyperlinks: Crawlers follow links on the page to discover new URLs and content.

This continuous process allows search engines to explore and discover the vast amount of information available on the internet.

3.2. Search Engine Indexing: Organizing Content

After discovering content through web crawling, search engines add it to a massive database called a search engine index. This index is like a library where web pages are organized for easy retrieval. The indexing process involves:

- Analyzing content: Extracting text, images, and metadata (data about data) from web pages.

- Storing information: Saving the extracted information in a structured format.

- Organizing the index: Categorizing and organizing web pages based on their content and metadata.

Metadata, such as meta titles and meta descriptions, helps search engines understand the content and context of a web page. According to research from the University of California, Berkeley’s School of Information, effective metadata usage significantly improves search engine indexing accuracy.
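One common way to organize pages for fast retrieval is an inverted index, which maps each word to the pages that contain it. The sketch below is a toy version under that assumption. The source does not say which data structure any particular search engine uses, and `build_index`, `lookup`, and the sample `PAGES` are hypothetical names. Each page’s title (a piece of metadata) is indexed together with its body text.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of URLs whose title or body contains it."""
    index = defaultdict(set)
    for url, page in pages.items():
        for word in tokenize(page["title"] + " " + page["body"]):
            index[word].add(url)
    return index


def lookup(index, query):
    """Return the URLs that contain every word of the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical crawled pages with a title and body text.
PAGES = {
    "https://example.com/crawlers": {
        "title": "Web Crawlers",
        "body": "Crawlers follow links to discover pages.",
    },
    "https://example.com/seo": {
        "title": "SEO Basics",
        "body": "Optimize pages so crawlers can index them.",
    },
}
index = build_index(PAGES)
print(lookup(index, "crawlers index"))
```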

3.3. Search Ranking: Delivering Relevant Results

When a user enters a search query, the search engine checks its index to find the most relevant information. The search ranking process involves:

- Matching the query: Identifying web pages that match the user’s search query.

- Evaluating relevance: Assessing the relevance and quality of each matching web page.

- Ranking results: Ordering the web pages based on their relevance and quality.

- Presenting results: Displaying the ranked list of web pages in the search engine results pages (SERPs).

Search algorithms use various factors to determine the ranking of web pages, including metadata, website authority, keywords, and links.
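To make the match, evaluate, and rank steps concrete, here’s a deliberately simple scoring sketch. It counts how often query words appear in a page and weights title matches more heavily than body matches. The weighting scheme, the `score` and `rank` functions, and the sample pages are all assumptions made for illustration. Real search algorithms combine far more signals, such as the authority and link factors mentioned above.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(page, query_words, title_weight=3):
    """Count query-word matches, weighting title matches above body matches."""
    title_words = tokenize(page["title"])
    body_words = tokenize(page["body"])
    total = 0
    for word in query_words:
        total += title_weight * title_words.count(word) + body_words.count(word)
    return total


def rank(pages, query):
    """Return (url, score) pairs for matching pages, best match first."""
    query_words = tokenize(query)
    scored = [(url, score(page, query_words)) for url, page in pages.items()]
    matches = [(url, s) for url, s in scored if s > 0]
    return sorted(matches, key=lambda pair: pair[1], reverse=True)


# Hypothetical indexed pages.
PAGES = {
    "https://example.com/crawlers": {
        "title": "Web Crawlers Explained",
        "body": "Crawlers follow links.",
    },
    "https://example.com/seo": {
        "title": "SEO Basics",
        "body": "Help crawlers index your site.",
    },
    "https://example.com/about": {
        "title": "About Us",
        "body": "Contact the team.",
    },
}
for url, points in rank(PAGES, "crawlers"):
    print(points, url)
```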

4. How Does Search Engine Optimization (SEO) Fit Into the Picture?

Search Engine Optimization (SEO) is the practice of optimizing website content to improve its visibility in search engine results. By implementing effective SEO strategies, website owners can increase their chances of ranking higher in the SERPs and attracting more organic traffic.

4.1. On-Page Optimization

On-page optimization involves optimizing the content and structure of individual web pages to make them more search engine friendly. Key on-page optimization techniques include:

- Keyword research: Identifying relevant keywords that users are searching for.

- Content creation: Creating high-quality, informative, and engaging content that incorporates target keywords.

- Meta tag optimization: Crafting compelling meta titles and meta descriptions that accurately reflect the content of the page.

- Header tag optimization: Using header tags (H1, H2, H3, etc.) to structure content and highlight important keywords.

- Image optimization: Optimizing images with descriptive alt text and appropriate file names.

- Internal linking: Linking to other relevant pages on the website to improve navigation and distribute link equity.
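Several of the on-page elements listed above can be checked automatically. The following sketch parses a page and reports a missing title, meta description, H1, or image alt text. The `OnPageAudit` class, the `audit` function, and the specific checks are hypothetical choices for this example, not an established audit standard.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Records title, meta description, <h1> count, and images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.h1_count = 0
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not attrs.get("alt"):
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return a list of human-readable on-page issues found in the HTML."""
    parser = OnPageAudit()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing <title>")
    if not parser.meta_description:
        issues.append("missing meta description")
    if parser.h1_count == 0:
        issues.append("missing <h1>")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) without alt text")
    return issues


# Hypothetical page: has a title and an H1, but no meta description or alt text.
SAMPLE = (
    "<html><head><title>Web Crawlers</title></head>"
    '<body><h1>Crawlers</h1><img src="bot.png"></body></html>'
)
print(audit(SAMPLE))
```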

4.2. Off-Page Optimization

Off-page optimization involves building a website’s authority and reputation through external factors. Key off-page optimization techniques include:

- Link building: Acquiring high-quality backlinks from reputable websites.

- Social media marketing: Promoting content on social media platforms to increase visibility and engagement.

- Online reputation management: Monitoring and managing a website’s online reputation to ensure a positive image.

4.3. Technical SEO

Technical SEO focuses on optimizing the technical aspects of a website to improve its crawlability and indexability. Key technical SEO techniques include:

- Website architecture: Designing a clear and logical website structure.

- Robots.txt optimization: Configuring the robots.txt file to control which pages search engines can crawl.

- Sitemap creation: Creating an XML sitemap to help search engines discover and index all the pages on the website.

- Mobile-friendliness: Ensuring that the website is mobile-friendly and responsive.

- Page speed optimization: Improving the loading speed of web pages.

- Structured data markup: Adding structured data markup to provide search engines with more information about the content on the page.
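As an illustration of the structured data item above, one widely used format is JSON-LD with the schema.org vocabulary, embedded in a script tag. The sketch below generates such a tag for an article. The `article_json_ld` helper and the sample values are hypothetical, and only a handful of schema.org `Article` properties are shown.

```python
import json


def article_json_ld(headline, author_name, date_published):
    """Build a JSON-LD <script> tag describing an article with schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical article details.
snippet = article_json_ld("How Web Crawlers Work", "Jane Doe", "2024-01-15")
print(snippet)
```

The resulting tag would typically be placed in the page’s HTML so that crawlers can read it alongside the visible content.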

By implementing a comprehensive SEO strategy that encompasses on-page, off-page, and technical optimization, website owners can significantly improve their search engine rankings and attract more organic traffic.

5. How Can You Tell Search Engines How to Crawl Your Website?

Website owners can communicate with search engines and provide instructions on how to crawl their websites using various methods:

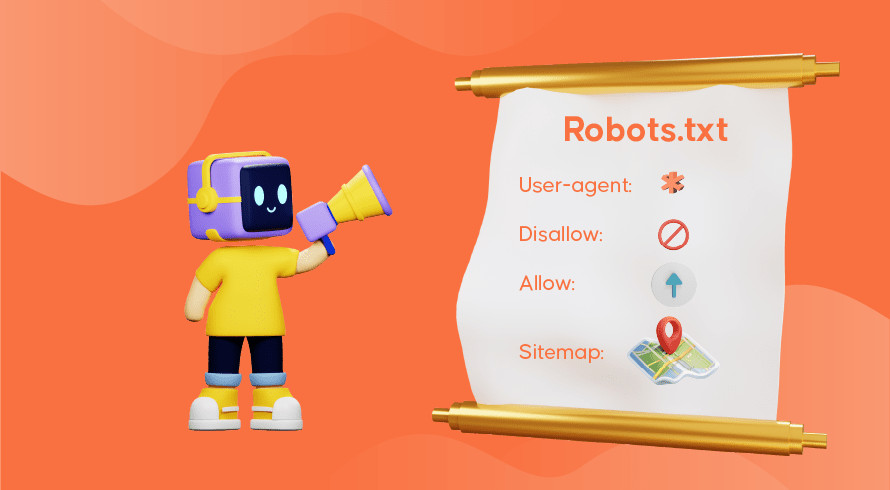

5.1. Robots.txt File

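The robots.txt file is a text file located in the root directory of a website that tells web crawlers which pages they may crawl and which to skip. It’s a crucial tool for controlling how search engines access your site. Note that it governs crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, so a noindex directive is the right tool for keeping a page out of the index.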

Robots.txt File Directives for Web Crawlers | pioneer-technology.com

Here are some common uses for the robots.txt file:

- Preventing crawling of specific pages: You can block crawlers from accessing pages that are under development or are duplicates of other pages. Because robots.txt is publicly readable and compliance is voluntary, it should not be relied on to protect sensitive information.

- Controlling crawling of directories: You can prevent crawlers from accessing entire directories on your website.

- Specifying crawl delay: You can set a Crawl-delay directive to ask crawlers not to overload your server. Support varies by crawler: Bingbot honors it, while Googlebot ignores it.

- Pointing to the sitemap: You can use the robots.txt file to point crawlers to your XML sitemap.

If a robots.txt file is not present, Googlebot will proceed to crawl the website in full. However, if the file is present but contains errors, Googlebot may not crawl the website correctly. According to a study by Moz, properly configured robots.txt files can significantly improve website crawl efficiency.
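Here’s a small sketch of how a crawler might interpret the directives described above, using Python’s standard `urllib.robotparser` module. The robots.txt content, the `ExampleBot` user agent, and the example.com URLs are made up for illustration. The `site_maps()` method requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content covering the common uses listed above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
Disallow: /admin/
Crawl-delay: 5

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from memory instead of fetching

# "ExampleBot" is an assumed crawler name; it falls under the "*" rules.
print(parser.can_fetch("ExampleBot", "https://example.com/blog/post"))   # True
print(parser.can_fetch("ExampleBot", "https://example.com/drafts/new"))  # False
print(parser.crawl_delay("ExampleBot"))                                  # 5
print(parser.site_maps())
```

Against a live site, `set_url()` followed by `read()` would fetch the file over HTTP instead of parsing it from a string.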

5.2. Meta Robots Tag

The meta robots tag is an HTML tag that provides instructions to search engine crawlers on how to handle a specific web page. It is placed in the <head> section of the HTML code.

Here are some common meta robots tag directives:

- index/noindex: Specifies whether the page should be indexed or not.

- follow/nofollow: Specifies whether the crawler should follow links on the page or not.

- noarchive: Prevents search engines from displaying a cached copy of the page.

- nosnippet: Prevents search engines from displaying a snippet of the page in search results.

- noimageindex: Prevents search engines from indexing images on the page.
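The directives above are written as a comma-separated list in the tag’s `content` attribute, for example `<meta name="robots" content="noindex, follow">`. The sketch below shows one way a crawler could read them. The `MetaRobotsParser` class and `robots_policy` function are hypothetical names, and only the index and follow directives are interpreted here.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_policy(html):
    """Return (may_index, may_follow); both are True when no directive says otherwise."""
    parser = MetaRobotsParser()
    parser.feed(html)
    may_index = "noindex" not in parser.directives
    may_follow = "nofollow" not in parser.directives
    return may_index, may_follow


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(robots_policy(page))  # (False, True)
```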

5.3. XML Sitemap

An XML sitemap is a file that lists all the URLs on a website and provides information about each URL, such as when it was last updated and how often it is likely to change. Sitemaps help search engines discover and crawl all the pages on a website, especially if the website has a complex structure or many orphaned pages (pages that are not linked to from other pages).
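A sitemap in the sitemaps.org format is a `urlset` element containing one `url` entry per page, with `loc`, `lastmod`, and `changefreq` children. Here’s a minimal sketch that generates one with Python’s standard library. The `build_sitemap` function and the example.com entries are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (loc, lastmod, changefreq) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, changefreq in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
        ET.SubElement(url, "changefreq").text = changefreq
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages with last-modified dates and expected change frequency.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15", "weekly"),
    ("https://example.com/blog/web-crawlers", "2024-01-10", "monthly"),
])
print(sitemap_xml)
```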

5.4. Using the Google Search Console

Google Search Console is a free tool provided by Google that allows website owners to monitor and manage their website’s presence in Google search results. It provides valuable insights into how Google crawls and indexes your website, and allows you to submit sitemaps, request indexing of individual URLs, and identify and fix crawling errors.

By using these methods, website owners can effectively communicate with search engines and ensure that their websites are crawled and indexed correctly.

6. What Are the Challenges of Web Crawling?

Web crawling presents several challenges for search engines and website owners:

6.1. Scale and Complexity

The sheer size and complexity of the internet pose a significant challenge for web crawlers. With billions of web pages and new content being added constantly, crawlers must be able to efficiently process and index vast amounts of information.

6.2. Dynamic Content

Many websites use dynamic content, which is generated in real-time based on user interactions or other factors. Crawling dynamic content can be challenging because the content may change each time the crawler visits the page.

6.3. Crawl Traps

Crawl traps are website structures that can cause web crawlers to get stuck in an infinite loop, consuming excessive resources and preventing them from crawling other parts of the website. Common crawl traps include:

- Calendar pages: Dynamically generated calendar pages with links to previous and next months can create an endless loop.

- Session IDs: URLs with unique session IDs can lead to duplicate content and crawling inefficiencies.

- Infinite redirect loops: Redirects that point back to the same page can trap crawlers in a loop.
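From the crawler’s side, two simple defenses against traps like these are normalizing URLs before comparing them and capping how deep the crawl follows links. The sketch below shows both. The parameter names in `SESSION_PARAMS`, the `MAX_DEPTH` value, and the function names are assumptions chosen for illustration, since real sites use their own session parameter names.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical session parameter names; real sites use their own.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}
MAX_DEPTH = 5  # assumed cap on link depth from the seed URL


def normalize(url):
    """Drop session parameters and fragments so duplicate URLs compare equal."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    query.sort()  # make parameter order irrelevant
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(query), "")
    )


def should_visit(url, depth, seen):
    """Skip URLs that are too deep or already seen after normalization."""
    key = normalize(url)
    if depth > MAX_DEPTH or key in seen:
        return False
    seen.add(key)
    return True


seen = set()
print(should_visit("https://example.com/shop?sid=abc123&page=2", 1, seen))
print(should_visit("https://example.com/shop?page=2&sid=xyz789", 1, seen))
print(should_visit("https://example.com/calendar?month=2031-01", 6, seen))
```

The depth cap stops an endless chain of next-month calendar links. Redirect loops are usually handled separately by limiting the number of redirects followed per request.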

6.4. Website Performance

Excessive crawling can put a strain on website servers and negatively impact website performance. Website owners need to manage crawl rates to ensure that crawlers don’t overload their servers.
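On the crawler side, one way to avoid overloading a server is to enforce a minimum delay between requests to the same host. Here’s a hedged sketch of that idea. The `PolitenessLimiter` class and its one-second default are hypothetical, and the clock and sleep functions are injectable so the behavior can be checked without actually waiting.

```python
import time
from urllib.parse import urlsplit


class PolitenessLimiter:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_delay_seconds=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay_seconds
        self.clock = clock
        self.sleep = sleep
        self.last_request = {}  # host -> time of the most recent request

    def wait(self, url):
        """Block until it is polite to request `url`; return the seconds waited."""
        host = urlsplit(url).netloc
        now = self.clock()
        waited = 0.0
        if host in self.last_request:
            remaining = self.min_delay - (now - self.last_request[host])
            if remaining > 0:
                self.sleep(remaining)
                waited = remaining
        self.last_request[host] = self.clock()
        return waited


# Simulated clock so the example finishes instantly.
fake_time = [0.0]
limiter = PolitenessLimiter(
    min_delay_seconds=2.0,
    clock=lambda: fake_time[0],
    sleep=lambda seconds: fake_time.__setitem__(0, fake_time[0] + seconds),
)
print(limiter.wait("https://example.com/a"))
print(limiter.wait("https://example.com/b"))
print(limiter.wait("https://other.example/"))
```

A crawler would call `wait(url)` right before each fetch. With the default arguments, the limiter uses the real clock and really sleeps.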

6.5. Respecting Website Rules

Web crawlers are expected to respect the rules set by website owners in the robots.txt file and other directives. Compliance is voluntary, but ignoring these rules can lead to legal issues and damage the relationship between search engines and website owners.

Despite these challenges, web crawling remains a critical process for search engines to discover, index, and rank web content.

7. How Can Pioneer-Technology.com Help You Understand Web Crawling?

At pioneer-technology.com, we understand that keeping up with the ever-evolving world of technology can be daunting. That’s why we strive to provide clear, concise, and informative content about web crawling and other cutting-edge technologies.

7.1. Expert Insights

Our team of technology experts provides in-depth analysis and insights into the latest trends and developments in web crawling technology. We break down complex concepts into easy-to-understand language, making it accessible to everyone.

7.2. Practical Tips

We offer practical tips and strategies for website owners to optimize their websites for web crawlers, improve their search engine rankings, and attract more organic traffic. Whether you’re a seasoned SEO professional or just starting out, you’ll find valuable information on our website.

7.3. Up-to-Date Information

We stay on top of the latest algorithm updates and best practices in the SEO industry. You can rely on pioneer-technology.com to provide you with the most current and accurate information about web crawling and SEO.

7.4. Call to Action

Ready to dive deeper into the world of technology? Visit pioneer-technology.com today to explore our extensive library of articles, tutorials, and resources. Stay ahead of the curve and unlock the power of web crawling and SEO! If you have any questions or would like to learn more about our services, don’t hesitate to contact us at 450 Serra Mall, Stanford, CA 94305, United States, or by phone at +1 (650) 723-2300.

8. What Are the Future Trends in Web Crawling?

The field of web crawling is constantly evolving to keep pace with the changing landscape of the internet. Here are some key trends to watch out for in the future:

8.1. AI-Powered Crawling

Artificial intelligence (AI) is playing an increasingly important role in web crawling. AI-powered crawlers can learn from data, adapt to changing website structures, and identify relevant content more effectively.

8.2. Semantic Web Crawling

Semantic web crawling focuses on understanding the meaning and context of web content, rather than just extracting keywords. This allows search engines to provide more accurate and relevant search results.

8.3. Mobile-First Crawling

With the increasing popularity of mobile devices, search engines are prioritizing mobile-first crawling, an approach Google calls mobile-first indexing. This means that they primarily use the mobile version of a website to index and rank content.

8.4. Voice Search Optimization

As voice search becomes more prevalent, website owners need to optimize their content for voice queries. This involves using natural language and conversational keywords in their content.

8.5. Ethical Crawling

Ethical crawling is becoming increasingly important as website owners and search engines strive to create a more sustainable and responsible web ecosystem. This involves respecting website rules, minimizing server load, and avoiding crawl traps.

9. What Are Some Common Web Crawling Mistakes to Avoid?

To ensure that your website is crawled and indexed correctly, it’s important to avoid these common web crawling mistakes:

9.1. Blocking Important Pages in Robots.txt

Accidentally blocking important pages in your robots.txt file prevents search engines from crawling them, so their content cannot be indexed properly, leading to a loss of organic traffic.

9.2. Creating Crawl Traps

Creating crawl traps can cause web crawlers to get stuck in an infinite loop, consuming excessive resources and preventing them from crawling other parts of your website.

9.3. Ignoring Mobile-Friendliness

Ignoring mobile-friendliness can negatively impact your search engine rankings, as search engines prioritize mobile-friendly websites.

9.4. Having Slow Page Speed

Having slow page speed can frustrate users and lead to higher bounce rates, which can negatively impact your search engine rankings.

9.5. Not Submitting a Sitemap

Not submitting a sitemap can make it difficult for search engines to discover and crawl all the pages on your website, especially if your website has a complex structure.

10. FAQ About Web Crawling

Here are some frequently asked questions about web crawling:

10.1. How Do Search Engines Crawl My Website?

Search engines use web crawlers, also known as spiders or bots, to discover new and updated content on websites. These crawlers follow links from page to page, indexing the content they find.

10.2. What Are the Most Popular Search Engine Bots?

Googlebot Desktop and Googlebot Smartphone (Google’s mobile crawler) are the most popular web crawlers, followed by Bingbot, Slurp Bot, and DuckDuckBot. Baiduspider is used mainly in China, while Yandex Bot is used in Russia.

10.3. How Can I See How Google Crawls My Website?

You can use the Google Search Console to see how Google crawls your website. This tool provides information about crawling errors, indexed pages, and other important metrics.

10.4. How Often Do Search Engines Crawl My Website?

The frequency with which search engines crawl your website depends on several factors, including the size of your website, the frequency of updates, and the authority of your website.

10.5. How Can I Improve My Website’s Crawlability?

You can improve your website’s crawlability by:

- Creating a clear and logical website structure.

- Submitting an XML sitemap to search engines.

- Optimizing your robots.txt file.

- Ensuring that your website is mobile-friendly.

- Improving your website’s page speed.

10.6. What Is the Difference Between Crawling and Indexing?

Crawling is the process of discovering new and updated content on websites. Indexing is the process of storing and organizing that content in a search engine’s database.

10.7. What Is a Crawl Budget?

A crawl budget is the number of pages that a search engine crawler will crawl on a website within a given timeframe. Search engines allocate a crawl budget to each website based on its authority, size, and other factors.

10.8. How Can I Optimize My Crawl Budget?

You can optimize your crawl budget by:

- Fixing crawling errors.

- Removing duplicate content.

- Blocking low-value pages in robots.txt.

- Improving website performance.

- Creating a clear and logical website structure.

10.9. What Is the Impact of Crawling on SEO?

Crawling is essential for SEO because it allows search engines to discover and index your website’s content. If your website is not crawled and indexed correctly, it will not appear in search results.

10.10. Where Can I Learn More About Web Crawling?

You can learn more about web crawling by visiting pioneer-technology.com and exploring our extensive library of articles, tutorials, and resources.

By understanding how web crawling works and implementing best practices, you can ensure that your website is crawled and indexed correctly, leading to improved search engine rankings and more organic traffic.